Human-Robot Dialogue System

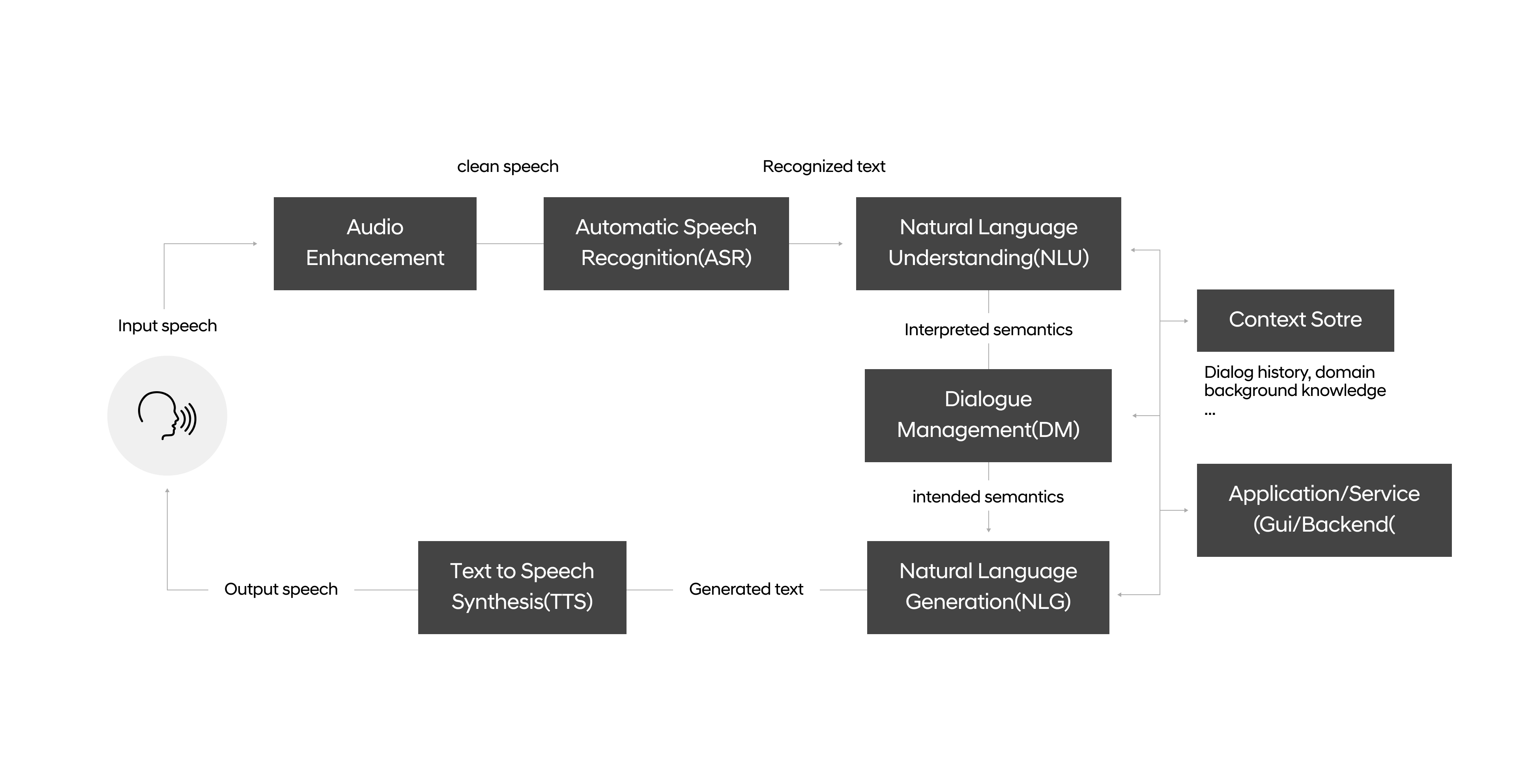

This state-of-the-art voice-based conversation system closely integrates several technologies, each handling one stage of the interaction: enhancement of the user's speech input (audio enhancement), speech and intent recognition (ASR/NLU), management of the dialogue toward the user's goal (DM), response generation (NLG), and text-to-speech (TTS).

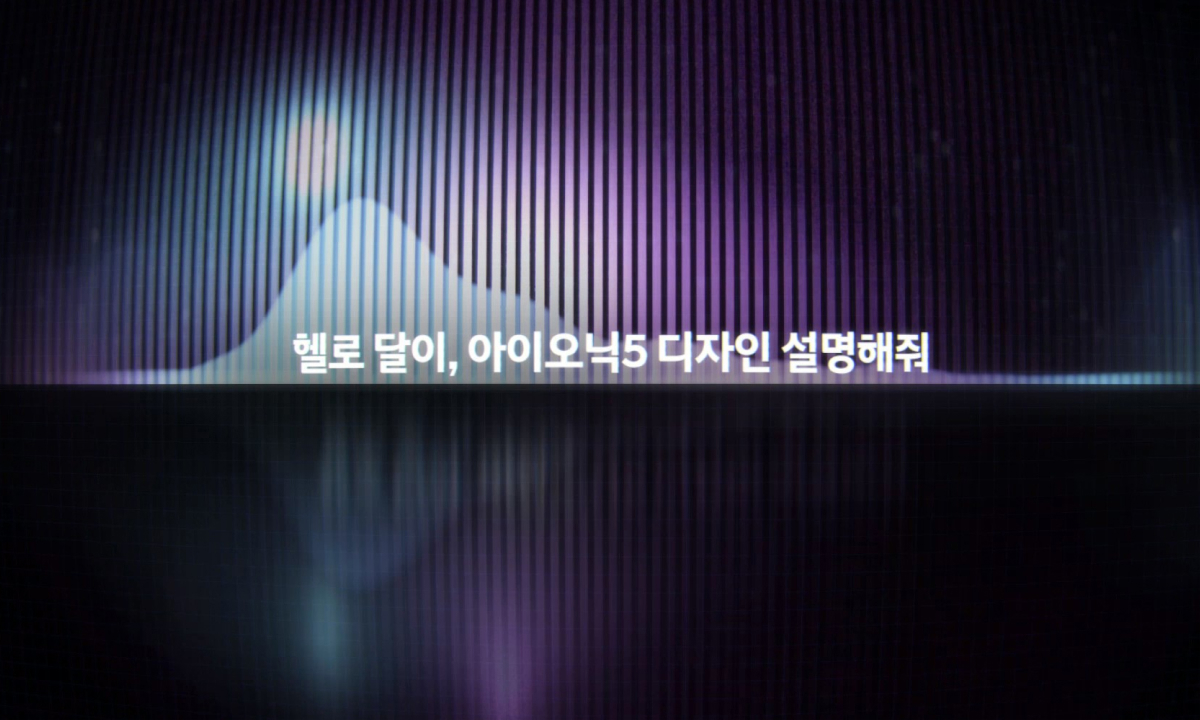

In particular, the conversation system is an important means by which a robot can interact naturally with users while expressing its own unique persona. Accordingly, we at Robotics LAB are focusing our research on speech preprocessing that reliably recognizes a user's input in any environment, broad conversation processing that responds to varied user input, and text-to-speech technology that can express a robot's character.

[Structure of the Voice-Based Conversation System]

1. Speech Preprocessing Technologies Based on Deep Learning

At Robotics LAB, we are carrying out research in areas such as direction estimation and noise cancellation to ensure seamless interaction between robots and humans in a variety of real-life environments.

Sounds captured by the robot are fed to CNN, RNN, and attention-based models, which learn to extract, compare, and predict meaningful features and produce results suited to each model's task. Moreover, to cover a wide range of service environments, we are researching acoustic data augmentation techniques such as adding random noise, delays, and reverberation.
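The augmentation types mentioned above can be sketched in a few lines. This is a minimal, dependency-free illustration, not Robotics LAB's actual pipeline: the function names, the SNR range, the delay range, and the toy echo-based impulse response are all assumptions chosen for readability.

```python
import math
import random

def add_noise(signal, snr_db, rng):
    """Add white Gaussian noise scaled to a target signal-to-noise ratio (dB)."""
    power = sum(x * x for x in signal) / len(signal)
    sigma = math.sqrt(power / (10 ** (snr_db / 10)))
    return [x + rng.gauss(0.0, sigma) for x in signal]

def add_delay(signal, delay_samples):
    """Shift the signal later in time by zero-padding, keeping the length."""
    if delay_samples <= 0:
        return list(signal)
    return ([0.0] * delay_samples + list(signal))[:len(signal)]

def add_reverb(signal, decay=0.5, tap_spacing=80, num_taps=4):
    """Convolve with a toy impulse response: direct path plus decaying echoes."""
    out = list(signal)
    for k in range(1, num_taps + 1):
        gain = decay ** k
        offset = k * tap_spacing
        for i in range(offset, len(signal)):
            out[i] += gain * signal[i - offset]
    return out

def augment(signal, rng):
    """Apply a random delay, optional reverberation, and random-level noise."""
    out = add_delay(signal, rng.randint(0, 160))       # assumed range: 0-10 ms at 16 kHz
    if rng.random() < 0.5:
        out = add_reverb(out, decay=rng.uniform(0.2, 0.6))
    return add_noise(out, snr_db=rng.uniform(5.0, 20.0), rng=rng)

# Example: a 0.1 s, 440 Hz tone sampled at 16 kHz.
rng = random.Random(0)
clean = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(1600)]
noisy = augment(clean, rng)
```

In practice, recorded noise and measured room impulse responses would usually replace the synthetic noise and echoes used here.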

Acoustic Echo Cancellation & Noise Reduction model

This feature removes echoes that the robot can detect, as well as noise that is not directly detected but can be predicted from the service environment. To improve both the sound quality of the speech data and the recognition rate of the recognition model, our research targets several metrics at once, including PESQ, SI-SNR, WER, and accuracy.
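Two of the metrics named above are simple enough to sketch directly. The following is a minimal illustration using the commonly used definitions of SI-SNR (scale-invariant signal-to-noise ratio) and WER (word error rate); the function names are hypothetical, and this is not the evaluation code used by Robotics LAB. PESQ is a standardized algorithm (ITU-T P.862) and is not reproduced here.

```python
import math

def si_snr(estimate, reference, eps=1e-8):
    """Scale-invariant SNR in dB between an enhanced signal and its clean reference."""
    # Remove the mean so a DC offset does not affect the score.
    est_mean = sum(estimate) / len(estimate)
    ref_mean = sum(reference) / len(reference)
    est = [x - est_mean for x in estimate]
    ref = [x - ref_mean for x in reference]
    # Project the estimate onto the reference to obtain the target component.
    dot = sum(e * r for e, r in zip(est, ref))
    ref_energy = sum(r * r for r in ref) + eps
    target = [dot / ref_energy * r for r in ref]
    # Whatever is left after removing the target component counts as noise.
    noise = [e - t for e, t in zip(est, target)]
    target_energy = sum(t * t for t in target)
    noise_energy = sum(n * n for n in noise) + eps
    return 10 * math.log10(target_energy / noise_energy + eps)

def wer(reference, hypothesis):
    """Word error rate: word-level edit distance divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] is the edit distance between the reference prefix so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (r != h)))   # substitution or match
        prev = curr
    return prev[-1] / max(len(ref), 1)
```

Higher SI-SNR means cleaner enhanced audio, while lower WER means better recognition, so the two can be tracked together when tuning an enhancement model for a downstream recognizer.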

[AEC & NR Model Performance]

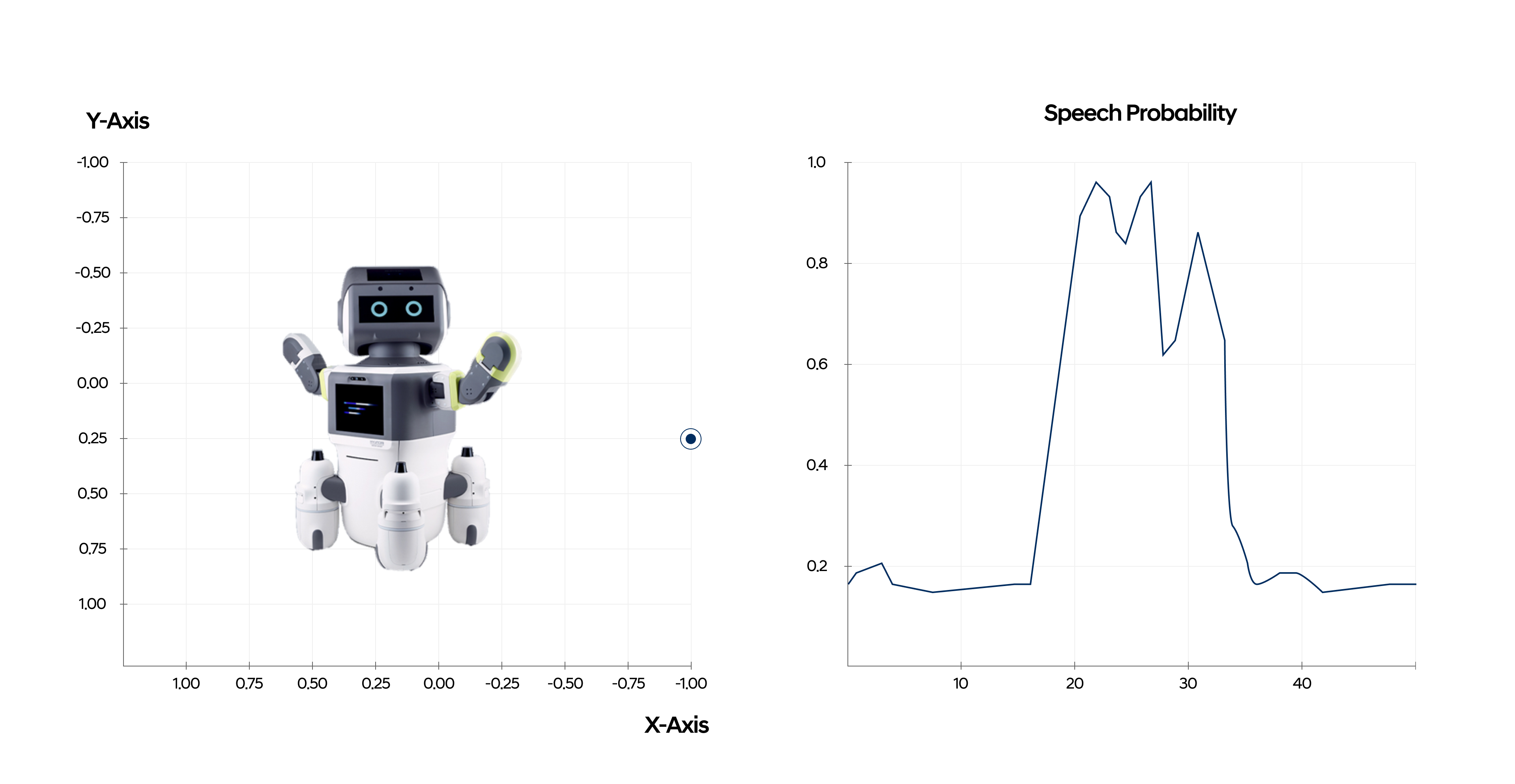

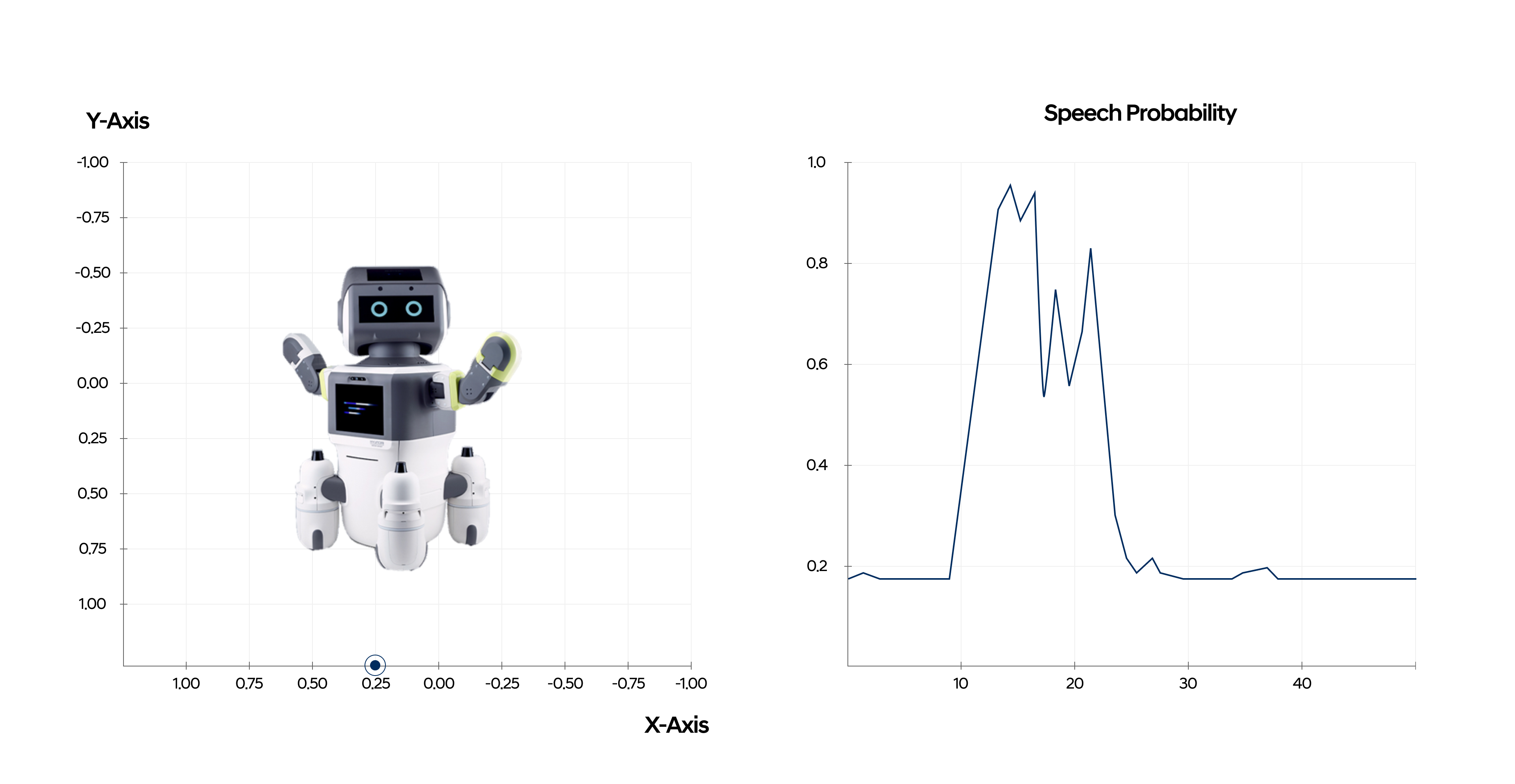

Speech Localization and Detection model

This feature determines the location of a speaker or other sound source that the robot's camera or sensors cannot detect. The horizontal (azimuth) and vertical (elevation) direction information and the speech location probability are calculated in real time using multi-channel microphones.

Case 1) Speech is made from the right of the robot

Case 2) Speech is made in front of the robot
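To make the idea of direction estimation from multiple microphones concrete, the sketch below uses a classical stand-in: time-difference-of-arrival estimation by cross-correlation between two microphones. This is not the deep-learning model described above. The two-microphone layout, the far-field assumption, the 0.2 m spacing, and all function names are assumptions for illustration, and only azimuth is estimated.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature

def estimate_delay(mic_a, mic_b, max_lag):
    """Return the lag (in samples) by which mic_b trails mic_a, found by
    maximizing the cross-correlation over lags in [-max_lag, max_lag]."""
    best_lag, best_score = 0, float("-inf")
    n = len(mic_a)
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += mic_a[i] * mic_b[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def estimate_azimuth(mic_left, mic_right, sample_rate, mic_spacing):
    """Azimuth in degrees: 0 is straight ahead, positive is to the right."""
    max_lag = int(math.ceil(mic_spacing / SPEED_OF_SOUND * sample_rate))
    # A source on the right reaches the right microphone first,
    # so the left channel trails it by a positive lag.
    lag = estimate_delay(mic_right, mic_left, max_lag)
    ratio = SPEED_OF_SOUND * lag / (sample_rate * mic_spacing)
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding beyond asin's domain
    return math.degrees(math.asin(ratio))

# Synthetic check mirroring the two cases above.
rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(400)]
delayed = [0.0] * 5 + source[:-5]                              # left mic hears it 5 samples later
from_right = estimate_azimuth(delayed, source, 16000, 0.2)    # Case 1: speech from the right
from_front = estimate_azimuth(source, source, 16000, 0.2)     # Case 2: speech from the front
```

With these assumed values, `from_right` comes out at about 32 degrees and `from_front` at 0. Estimating elevation as well requires microphones that are not all on one line.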

Multi-modal research & Edge computing development

To ensure that the robot conversation service runs seamlessly even in severely noisy environments, visual data is used together with speech data to detect the periods in which the speaker is talking. We are developing audio-visual speech preprocessing technologies such as enhancement of noisy speech, and we are researching real-time optimization using various neural network accelerators so that deep-learning models can run on the robot itself.

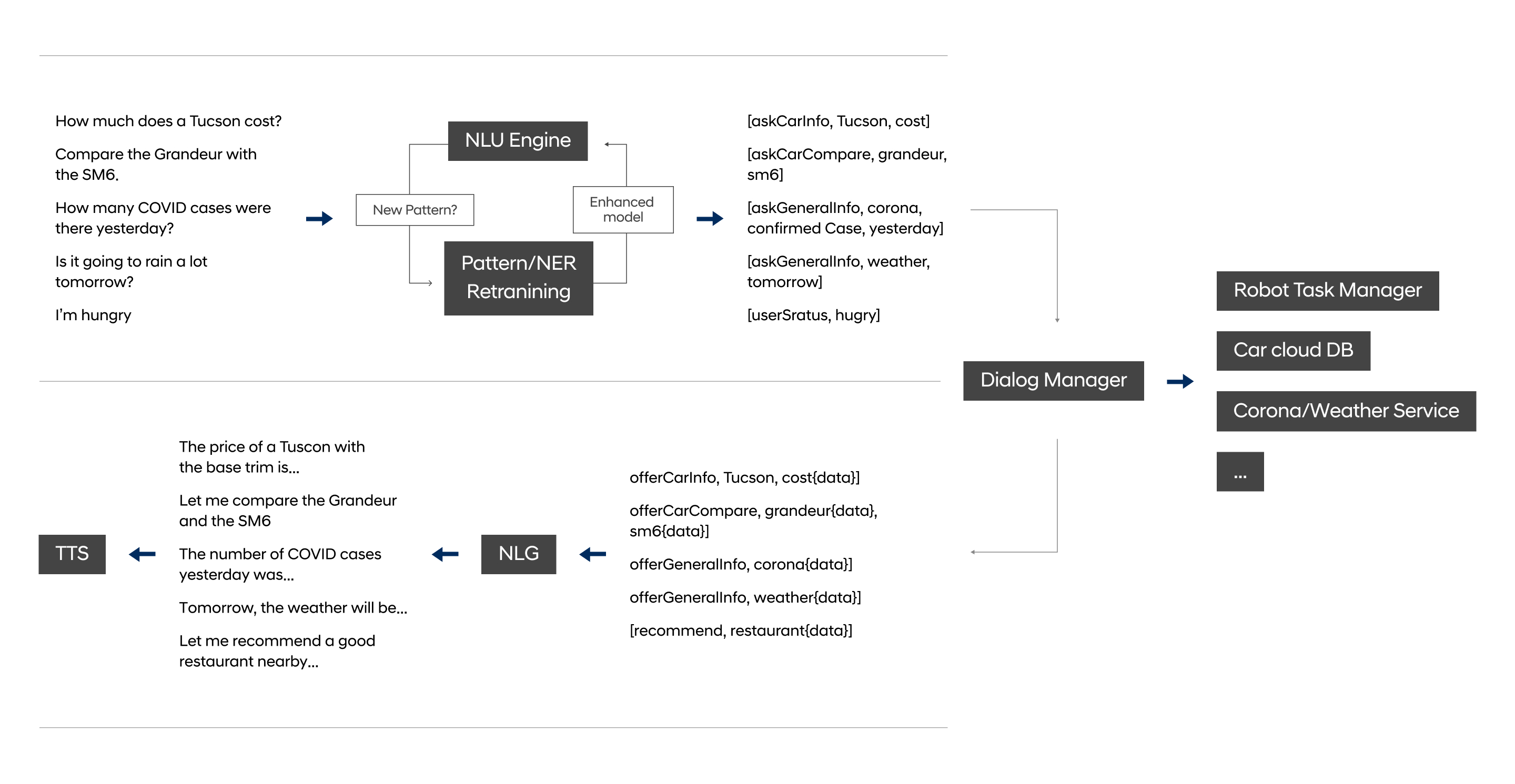

2. Speech Conversation System

User speech input is generally analyzed into an Intent and Entities by the Natural Language Understanding (NLU) engine. However, inputs sometimes omit necessary situational information that is not expressed in speech, or arrive in an unclear form because of the user's speaking habits.

Robotics LAB converts such input into a task-oriented Intent and Entities, and thus into commands the robot can execute, by improving the entity recognition of the NLU module and by using the Dialog Manager to complete the request. Once the input is complete, the system provides the user with the necessary information through Natural Language Generation (NLG) and text-to-speech (TTS). A robot chatbot system that uses external platforms such as KakaoTalk is also being developed, along with a fun and easy-to-use dialog system for various platforms.
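The interplay between NLU and the Dialog Manager can be illustrated with a toy example in which a request that lacks a required entity is completed over a second turn. Everything here is hypothetical: the intent name `guide_to_location`, the destination list, the keyword rules, and the reply templates are assumptions for illustration, and a production system would use trained models rather than keyword matching.

```python
import re
from dataclasses import dataclass, field

@dataclass
class NLUResult:
    intent: str
    entities: dict = field(default_factory=dict)

# Hypothetical schema: each intent lists the entities it requires.
REQUIRED_ENTITIES = {"guide_to_location": ["destination"]}
KNOWN_DESTINATIONS = ["lobby", "cafeteria", "meeting room"]

def understand(utterance):
    """Toy rule-based NLU: keyword matching for the intent and its entity."""
    text = utterance.lower()
    entities = {}
    for place in KNOWN_DESTINATIONS:
        if place in text:
            entities["destination"] = place
    if re.search(r"\b(take|guide|go|where)\b", text) or entities:
        return NLUResult("guide_to_location", entities)
    return NLUResult("unknown", entities)

class DialogManager:
    """Keeps dialogue state and fills in missing entities across turns."""

    def __init__(self):
        self.pending = None  # request still waiting for required entities

    def step(self, utterance):
        result = understand(utterance)
        if self.pending is not None and result.entities:
            # Merge the follow-up answer into the unfinished request.
            self.pending.entities.update(result.entities)
            result = self.pending
        if result.intent == "unknown":
            return {"reply": "Sorry, I did not understand that."}
        missing = [name for name in REQUIRED_ENTITIES.get(result.intent, [])
                   if name not in result.entities]
        if missing:
            self.pending = result
            return {"reply": f"Which {missing[0]} do you mean?"}
        self.pending = None
        return {"command": (result.intent, dict(result.entities)),
                "reply": f"Okay, heading to the {result.entities['destination']}."}

dm = DialogManager()
first = dm.step("Can you take me there?")   # destination is missing
second = dm.step("The cafeteria")           # follow-up completes the request
```

Here `first` contains only a clarifying question, while `second` contains both an executable command and a reply string that would be passed on to NLG and TTS.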

[Speech Conversation System Workflow]

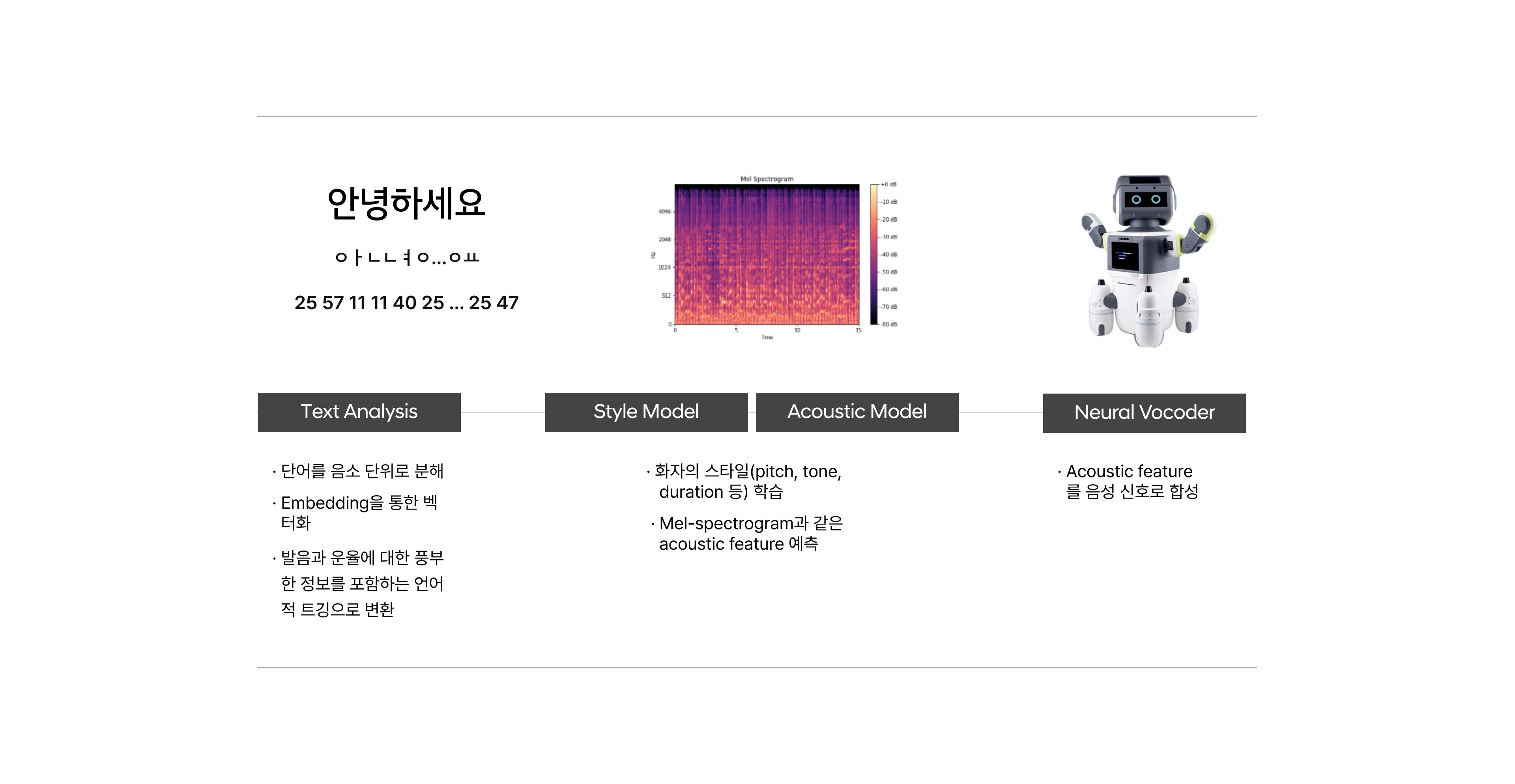

3. Text-to-Speech (TTS)

Text-to-Speech (TTS) is a technology that analyzes text to generate human-like speech. At Robotics LAB, the input text is broken down into phonemes, and each sentence is then analyzed with pronunciation, intonation, and similar factors in mind (text analysis). The speaker's speaking style (pitch, duration, tone, etc.) is then learned from the analyzed sentence. Transformers and other modules built on Seq2Seq (Sequence-to-Sequence) and attention are used to predict the spectrogram (acoustic model).

A neural vocoder then generates the speech waveform from this spectrogram. With this technology, dynamic TTS that expresses emotion can be implemented for robots, providing a fresh and enjoyable experience for customers.